Tutorial

Prerequisite: Python

Python is a powerful programming language ideal for scripting and rapid application development. It is used in fields ranging from web development (Django, Bottle) and scientific and mathematical computing (Orange, SymPy, NumPy) to desktop graphical user interfaces (Pygame, Panda3D).

This tutorial introduces you to the basic concepts and features of Python 3. After reading the tutorial, you will be able to read and write basic Python programs, and explore Python in depth on your own.

Basic Operations

By following these instructions, you will learn how to open an RGB camera and how to read and save a picture.

Open the “OpenCVBasic” folder.

- Open Camera
  - Connect two cameras to the Raspberry Pi through the USB 3.0 ports.
  - Run “openCamera.py”.
  - Press “q” to exit.
  - In “openCamera.py”, change “cap = cv2.VideoCapture(0)” to “cap = cv2.VideoCapture(2)” to open the other camera.
  - Run “openCamera.py” again.
  - Press “q” to exit.

- Save images
  - Run “capture.py”.
  - Press “c” to capture an image.
  - Press “q” to exit.
  - Check the captured image “pic.png” in the same folder.

- Read & write images
  - Run “ReadAndWrite_img.py”.
  - Check the difference between the two images.
  - Press “q” to exit.
  - Check “pic.png” and “picture1.jpg” in the same folder.

Color Extraction

By following these instructions, you will learn how to select and extract a specific color from an image.

Open the “OpenCVBasic” folder.

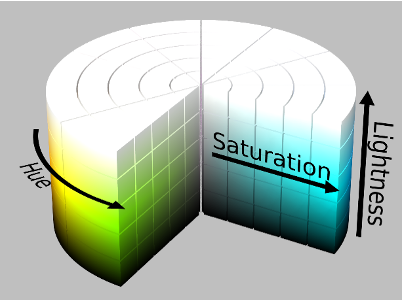

- HSV Color Space
  - Run “colorSelection_HSV.py”.
  - Set the “S” and “V” values to “255”.
  - Slide the “H” value from “0” to “255”.
  - Try different combinations of the “H”, “S” and “V” values.
  - Press “q” to exit the program.

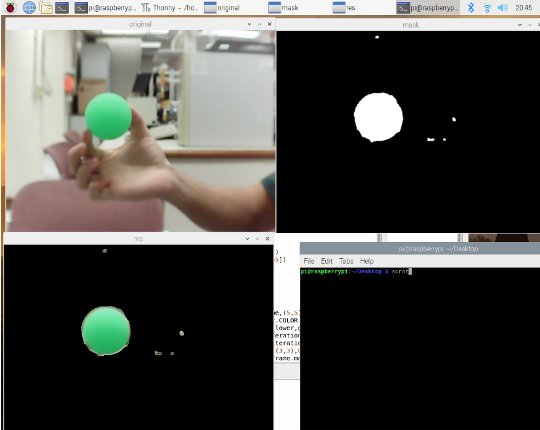

- Color Extraction

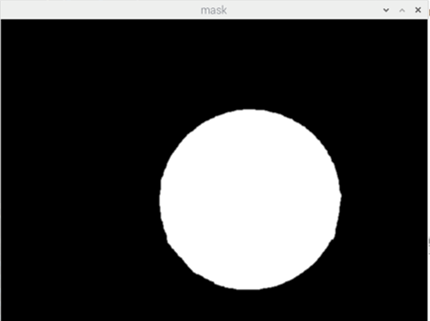

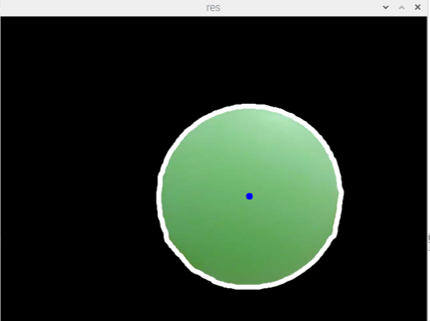

  - Run “colorExtraction.py” in the terminal and put a green ball in front of the camera. You should see three windows, “original”, “mask” and “res”, as shown in the screenshots.
  - Press “s” to save an image of each of the three windows.

- Create a contour
  - Run “colorExtractionTracking.py”.

HSV (hue, saturation, value) is an alternative representation of the RGB color model, designed in the 1970s by computer graphics researchers to more closely align with the way human vision perceives color-making attributes.

In this model, colors of each hue are arranged in a radial slice, around a central axis of neutral colors which ranges from black at the bottom to white at the top.

The HSV representation models the way paints of different colors mix together, with the saturation dimension resembling various tints of brightly colored paint, and the value dimension resembling the mixture of those paints with varying amounts of black or white paint.

In this section, we will learn how to extract the color green from the video input of the RGB camera.

From the previous lab session, we have already learned how to create a contour.

The next step is to locate the contours and represent them visually.

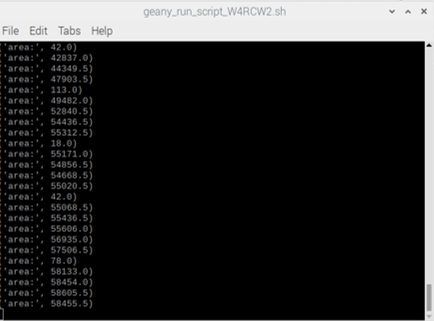

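The contours are located with “contours, _ = cv2.findContours(mask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)[-2:]”. OpenCV 3.x returns three values from this call and OpenCV 4.x returns two, so taking the last two works with both versions.

We can find the area of a contour c with “area = cv2.contourArea(c)”. The area of each contour is displayed in the terminal.

The center of a contour can be obtained from its moments, “M = cv2.moments(c)”, as (M["m10"] / M["m00"], M["m01"] / M["m00"]).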

Camera Calibration

In this section, you will learn the basics of camera calibration and calibrate two cameras yourself.

Open the “StereoCallibration” folder.

- Camera distortion

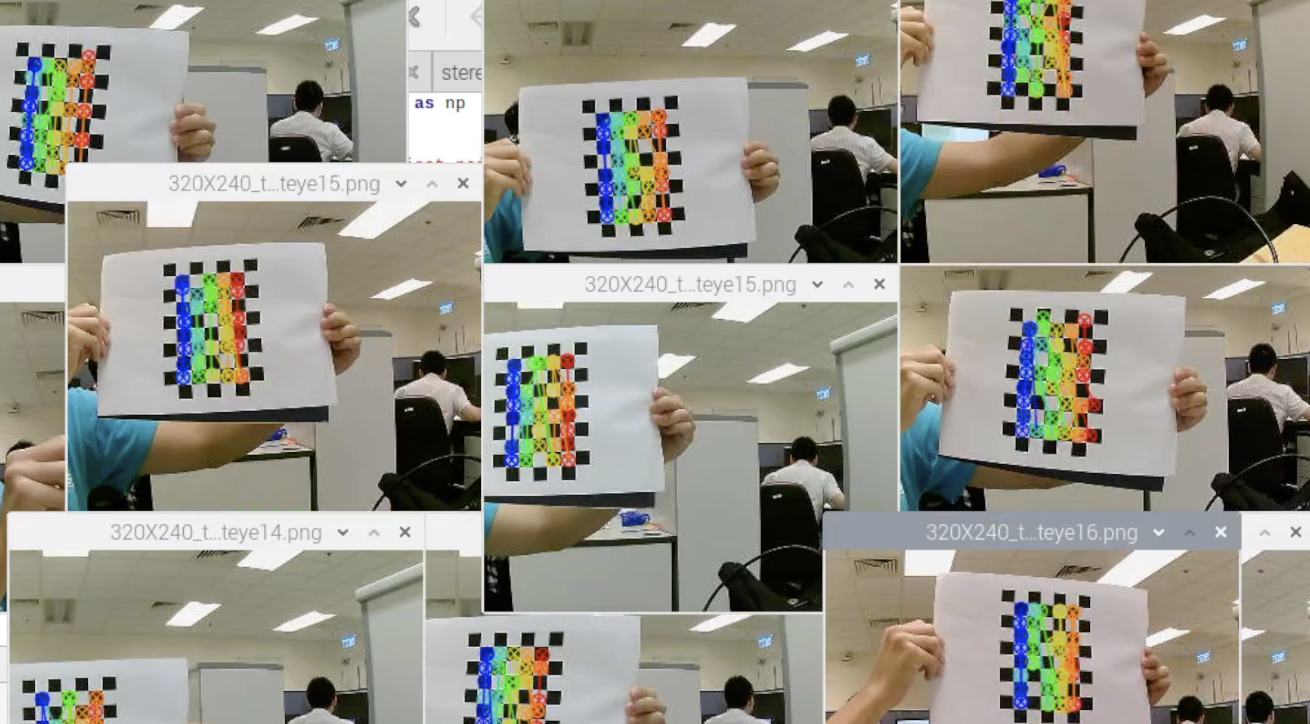

- Capture images of the calibration board
  - Run “capture_two(ste).py”.
  - Place the calibration chess board in front of the two cameras. Make sure both cameras can see the entire board.
  - Press “c” to capture images.
  - Change the position and orientation of the chess board and repeat the placing and capturing steps at least 20 times to capture enough images for calibration.
  - Press “q” to exit.

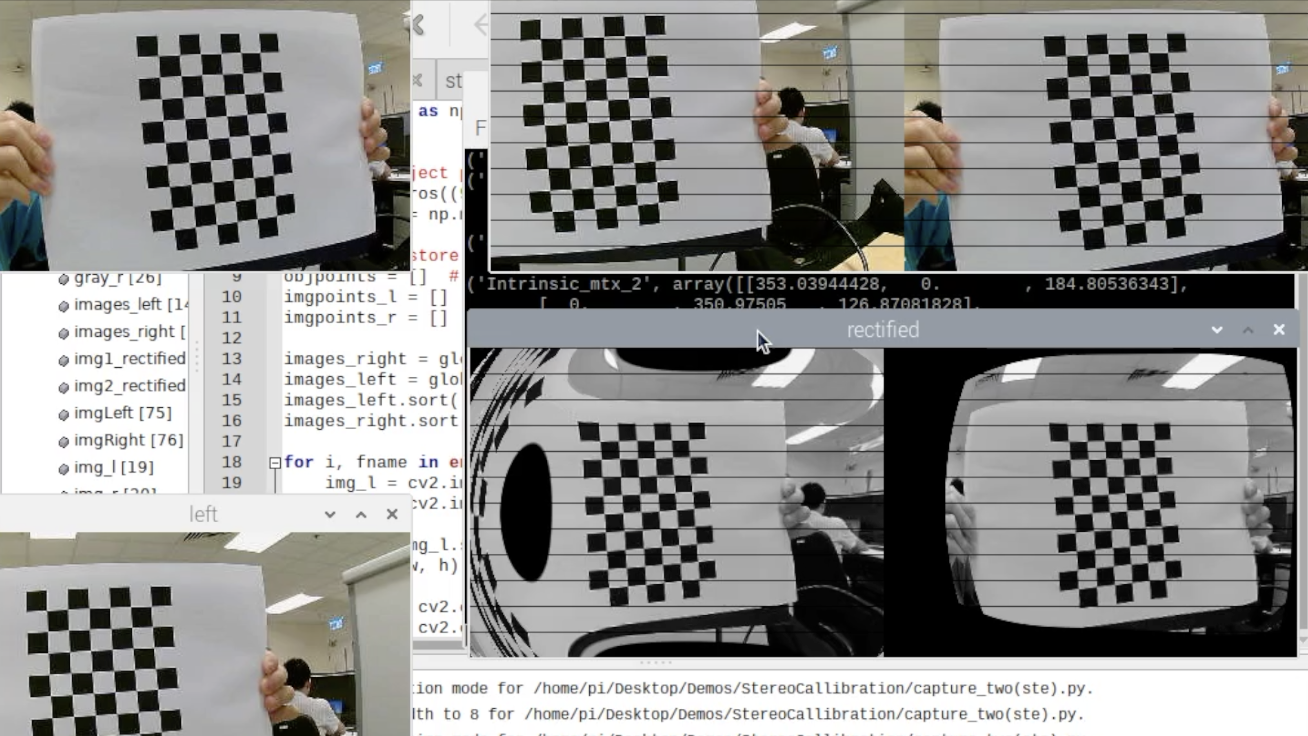

- Calculate and record the distortion and camera intrinsic parameters
  - Run “stereo_callibration.py”.
  - Record the printed results, including:
    - Left camera intrinsic parameters: a 3x3 matrix (printed after ‘Intrinsic_mtx_left(K_left)’)
    - Left camera distortion parameters: a 1x5 vector (printed after ‘dist_left’)
    - Right camera intrinsic parameters: a 3x3 matrix (printed after ‘Intrinsic_mtx_left(K_right)’)
    - Right camera distortion parameters: a 1x5 vector (printed after ‘dist_right’)

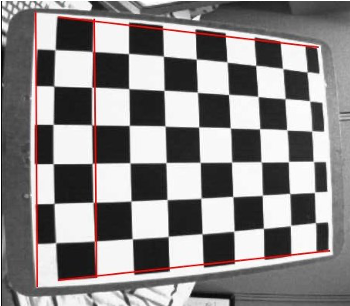

Two major distortions are radial distortion and tangential distortion.

Due to radial distortion, straight lines will appear curved.

Its effect becomes stronger the farther a point is from the center of the image.

For example, in the example calibration image, two edges of the chess board are marked with red lines.

You can see that the border of the board is not a straight line and does not match the red line. All the expected straight lines bulge outward.

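This distortion is modeled as follows, where $(x, y)$ are the undistorted normalized image coordinates and $r^2 = x^2 + y^2$:

$$x_{\text{distorted}} = x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6)$$
$$y_{\text{distorted}} = y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6)$$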

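Similarly, tangential distortion occurs because the lens is not aligned perfectly parallel to the imaging plane, so some areas of the image may look nearer than expected. It is modeled as follows, with $r^2 = x^2 + y^2$:

$$x_{\text{distorted}} = x + \left[2 p_1 x y + p_2 (r^2 + 2x^2)\right]$$
$$y_{\text{distorted}} = y + \left[p_1 (r^2 + 2y^2) + 2 p_2 x y\right]$$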

In short, we need to find five parameters, known as distortion coefficients, given by:

$$\text{Distortion coefficients} = (k_1 \;\; k_2 \;\; p_1 \;\; p_2 \;\; k_3)$$

For stereo applications, these distortions need to be corrected first. To find all these parameters, we provide some sample images of a well-defined pattern (e.g., a chess board) and locate specific points in it (the square corners of the chess board). We know the coordinates of these points both in real-world space and in the image. From these correspondences, an optimization problem is solved in the background to obtain the distortion coefficients.

For better results, we need at least 20 images of the test pattern.

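In addition, we need to find the intrinsic parameters of each camera: the focal lengths $(f_x, f_y)$ and the optical center $(c_x, c_y)$. Together they form the 3x3 camera matrix:

$$K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$$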
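The radial and tangential distortion model, with the coefficients ordered (k1, k2, p1, p2, k3) as in OpenCV's 1x5 vector, can be sketched in NumPy to see what each coefficient does. This applies the forward model to normalized coordinates; undistortion (for example cv2.undistort) inverts it numerically. The function name is hypothetical.

```python
import numpy as np


def distort_points(xy, dist):
    """Apply radial + tangential distortion to normalized image coordinates.

    xy:   (N, 2) array of undistorted normalized coordinates (x, y).
    dist: (k1, k2, p1, p2, k3), the same order as OpenCV's 1x5 vector.
    """
    k1, k2, p1, p2, k3 = np.ravel(dist)[:5]
    x, y = xy[:, 0], xy[:, 1]
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3  # 1 + k1 r^2 + k2 r^4 + k3 r^6
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return np.stack([x_d, y_d], axis=1)
```

For instance, with only k1 = 0.1 the point (1, 0) moves to (1.1, 0): a positive k1 pushes points outward, more strongly the farther they are from the center.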

**If it does not work well, swap the camera IDs: change cv2.VideoCapture(0) to cv2.VideoCapture(2) and cv2.VideoCapture(2) to cv2.VideoCapture(0).**

Disparity Map

In this section, you will learn how to create a depth map from stereo images.

Open the “DisparityMap” folder.

- Background knowledge of disparity map

- Disparity map with OpenCV
  - Run “capture_two(dis).py”.
  - Press “c” to capture and save images.
  - Press “q” to quit.
  - Open the “DisparityMap.py” file.
  - In lines 9 to 20, replace the camera intrinsic and distortion parameters with the values you just calibrated, and save the file.
  - Run “DisparityMap.py”.
  - Press any key to exit.

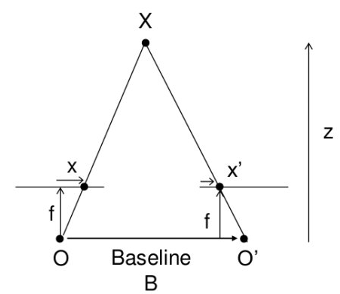

If we have two images of the same scene, we can obtain depth information from them in an intuitive way. A stereo-geometry diagram and a simple mathematical formula illustrate this intuition.

The diagram contains similar triangles. Writing their equivalent equations yields the following result, where Z is the depth of the scene point:

$$\text{disparity} = x - x' = \frac{B f}{Z}$$

Here x and x′ are the distances between the points in the two image planes that correspond to the 3D scene point and their respective camera centers. B is the distance between the two cameras (the baseline, which we know) and f is the focal length of the camera (also known). In short, the equation says that the depth of a point in a scene is inversely proportional to the difference in distance of corresponding image points from their camera centers. With this information, we can derive the depth of every pixel in an image.
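As a worked example of this relation, the sketch below converts a disparity map into depth with Z = f·B/d. The function name is hypothetical, the focal length is assumed to be in pixels, and the depth comes out in the same unit as the baseline; pixels without a valid match (disparity ≤ 0) are set to 0.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline):
    """Depth Z = f * B / d; pixels with d <= 0 (no match) get depth 0."""
    d = np.asarray(disparity, dtype=np.float64)
    depth = np.zeros_like(d)
    valid = d > 0
    depth[valid] = focal_px * baseline / d[valid]
    return depth
```

With made-up values f = 500 px and B = 0.06 m, a disparity of 10 px gives a depth of 3 m and a disparity of 20 px gives 1.5 m: doubling the disparity halves the depth.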

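To compute disparity, the algorithm first finds corresponding matches between the two images. The epipolar constraint makes this search faster and more accurate: in rectified images, the match for a pixel lies on the same row of the other image. Once the matches are found, the algorithm computes the disparity. Let's see how we can do this with OpenCV.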

**This code only works with Python 2.**

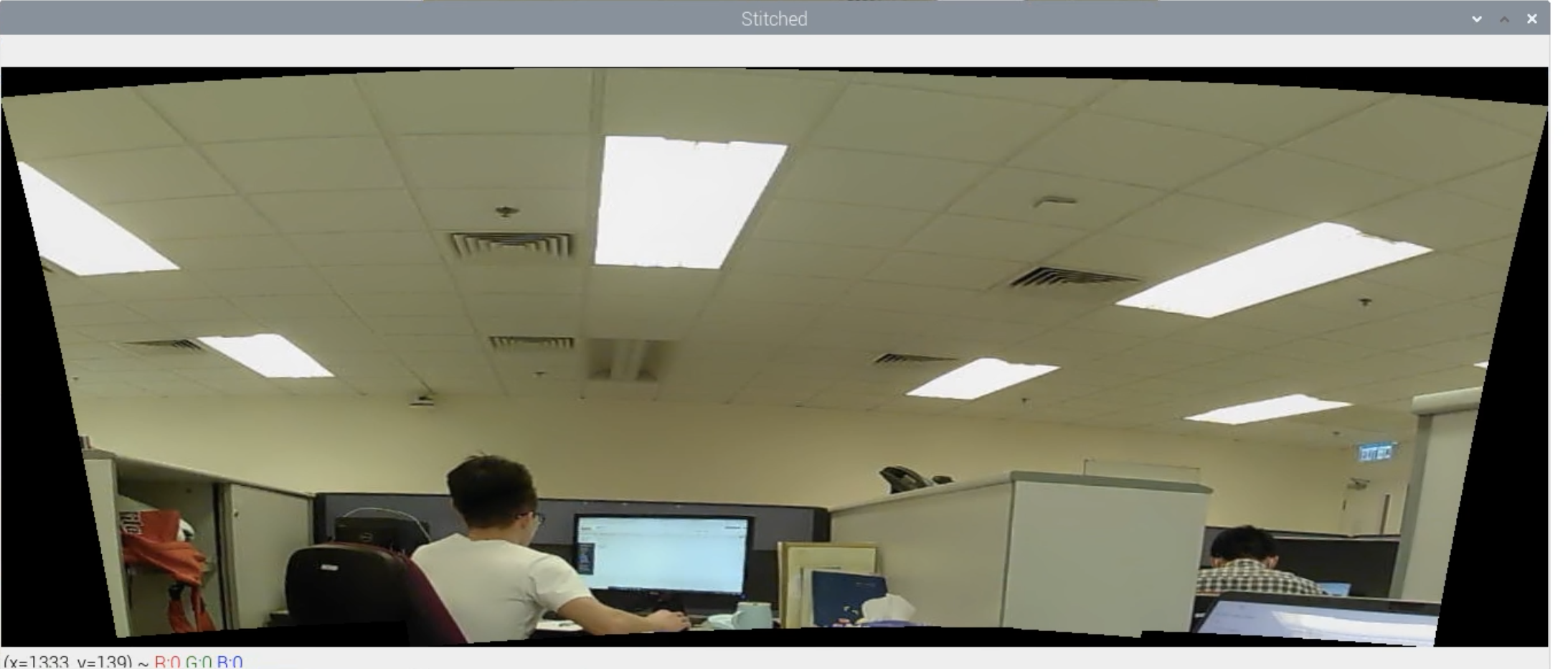

Image Stitching

Background: Image stitching or photo stitching is the process of combining multiple photographic images with overlapping fields of view to produce a segmented panorama or high-resolution image. In order to estimate image alignment, algorithms are needed to determine the appropriate mathematical model relating pixel coordinates in one image to pixel coordinates in another. Algorithms that combine direct pixel-to-pixel comparisons with gradient descent (and other optimization techniques) can be used to estimate these parameters. Distinctive features can be found in each image and then efficiently matched to rapidly establish correspondences between pairs of images. When multiple images exist in a panorama, techniques have been developed to compute a globally consistent set of alignments and to efficiently discover which images overlap one another. A final compositing surface onto which to warp or projectively transform and place all of the aligned images is needed, as are algorithms to seamlessly blend the overlapping images, even in the presence of parallax, lens distortion, scene motion, and exposure differences.

Alcatraz Island, shown in a panorama created by image stitching

Function: stitch two images captured from the camera into one complete image.

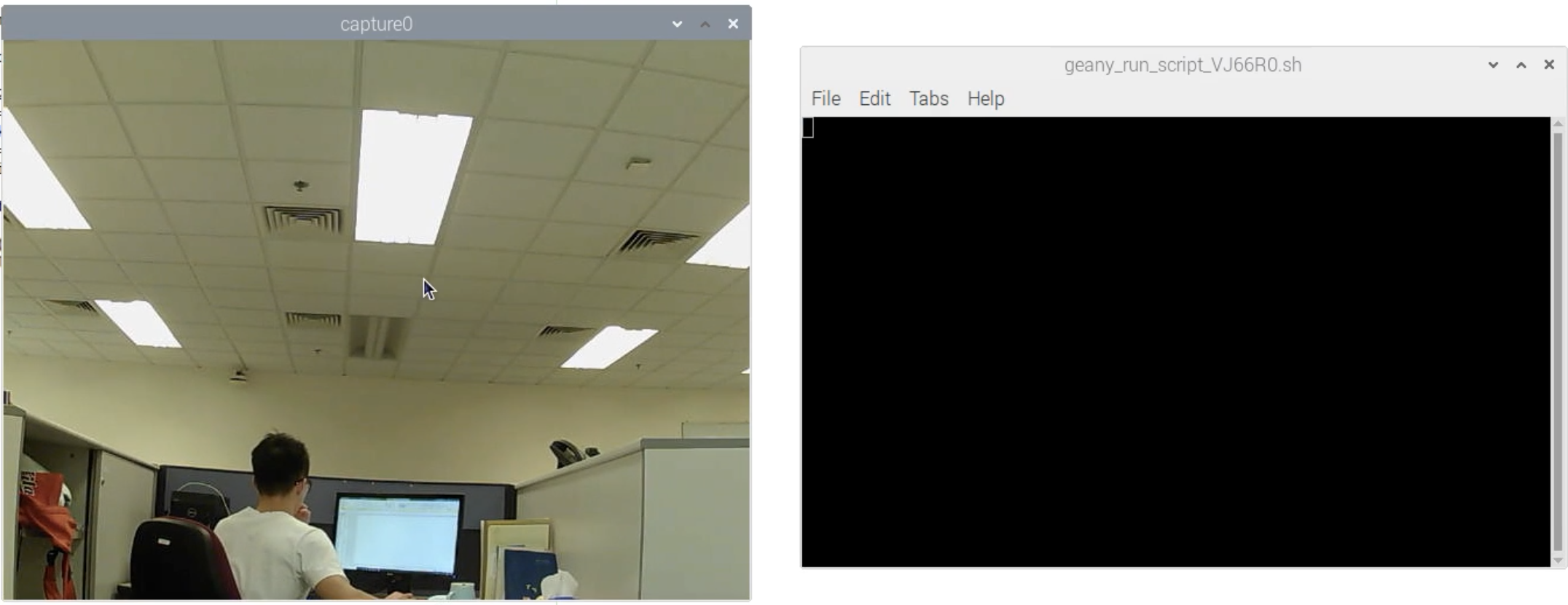

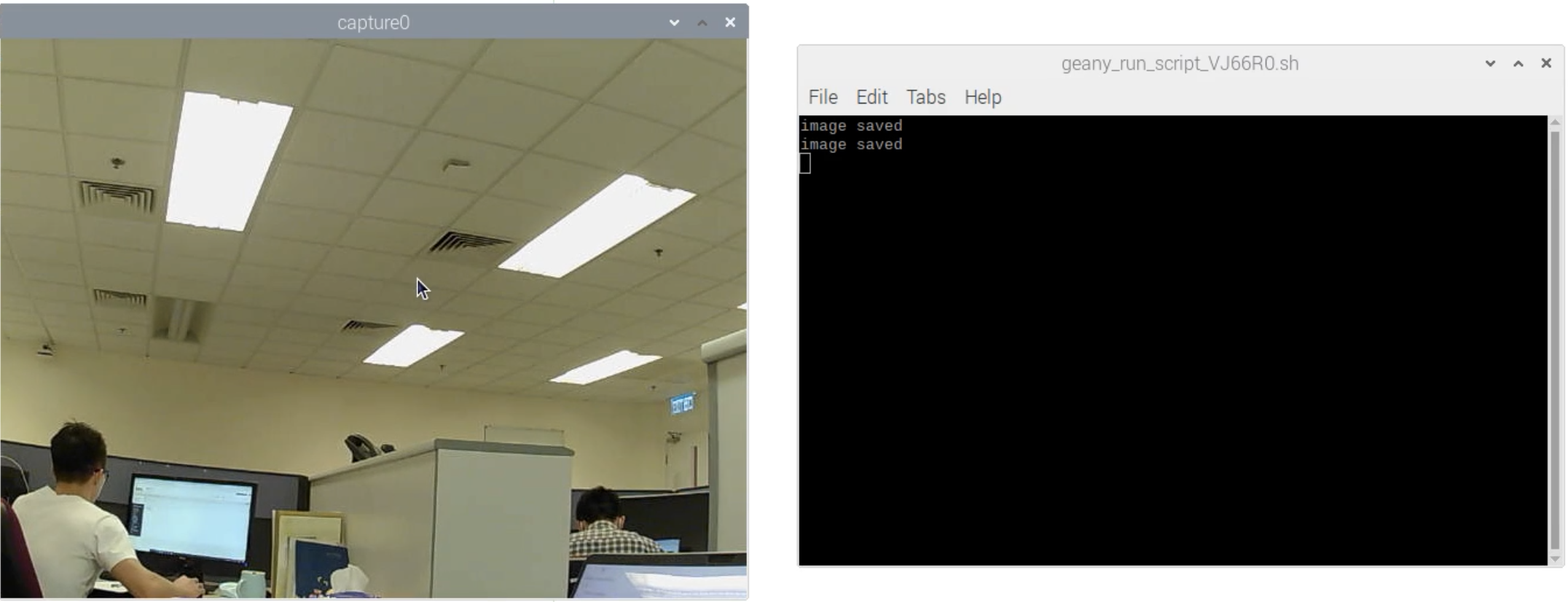

- Run “takePictures.py” in the “imgStitching” folder to take the two images first.

- Keep the cursor focus on the “capture0” window and press “s” to take the first image; the terminal panel on the right prints “image saved”. Then turn the camera slightly and press “s” to take the second image.

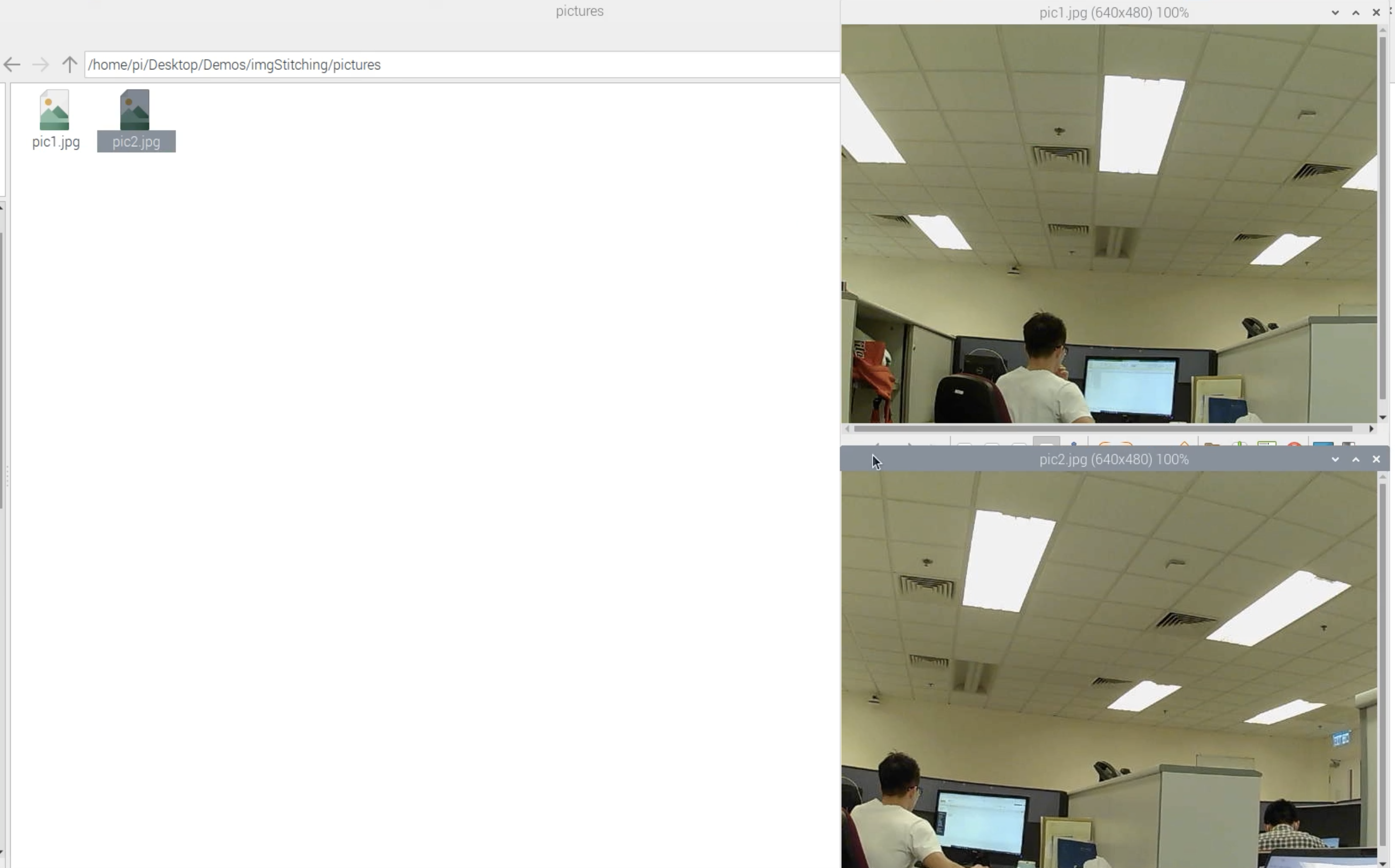

- The newly captured images are saved in the “pictures” folder.

- Run “imgStitch.py” using the predefined Python 3 command to generate the stitched image. **This code only works with Python 3.**

To execute the script with Python 3, click “ExecutePy3” under the Build menu.

Rectification

- Copy all the images captured in the Camera Calibration exercise above (/StereoCallibration/320X240_twoeyes_calibration) into the image folder of /Rectified/320X240_twoeyes.

- Run “rectify.py” to calculate the parameters needed for rectification.

- Finally, the program selects a pair of images, one captured by the left camera and one by the right camera, and computes the rectified images. **If there is no response from the terminal window after a while, press “Enter” on the keyboard.**